Introduction

We built the Small Language Model (SLM) RAG Arena, a community-driven evaluation platform inspired by LMSYS's Chatbot Arena. It compares SLMs (under 5B parameters) on document-based question answering through blind A/B testing.

Our work with automated evaluation methods showed that both metrics and LLM judges have room for improvement in assessing real-world performance. Human feedback is crucial for calibrating these judges on subjective quality, so that automated evaluators capture what users actually value in RAG responses.

Unlike Chatbot Arena, which tests general capabilities, our arena focuses on specific applications. This also reflects how SLMs have succeeded in the open-source community and in real-world deployments: not by doing everything adequately, but by excelling at specific tasks.

By examining how models summarize (or generate answers) from a query and its retrieved context, we aim to pinpoint exactly why many SLMs struggle with RAG tasks. This will help us build a better automated evaluator and identify the right path toward a strong sub-5B summarizer for local RAG systems.

What is the SLM RAG Arena?

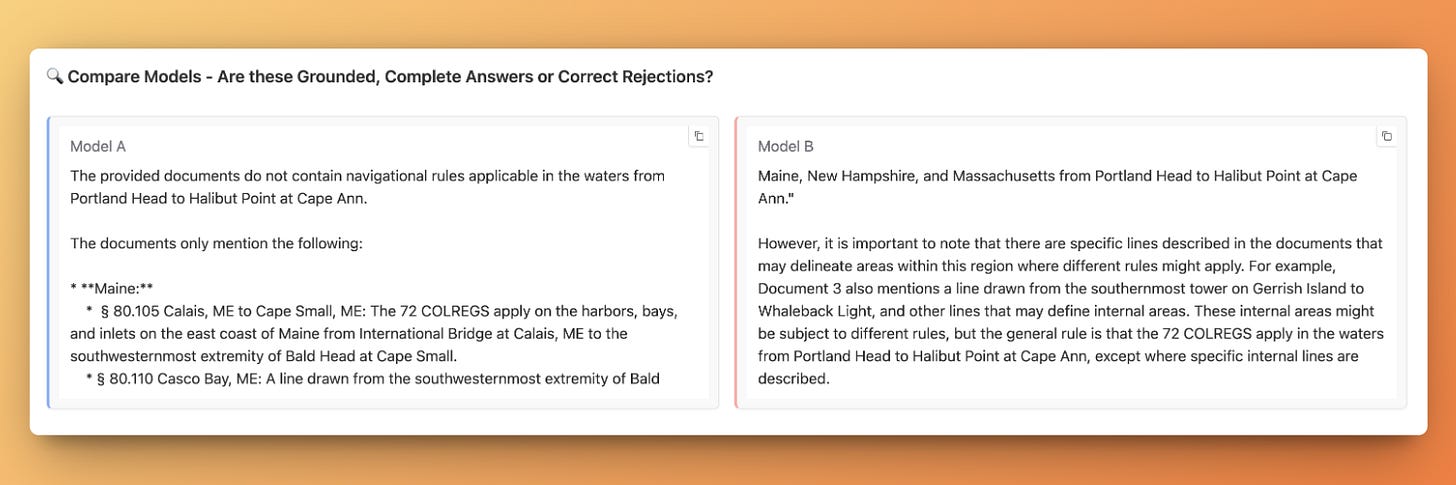

Like Chatbot Arena, the SLM RAG Arena lets people compare sub-5B language models head-to-head on RAG tasks. Our blind A/B testing interface randomly selects two models to answer the same question using identical context, then presents both responses anonymously to prevent brand bias.
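A minimal sketch of the blind pairing step, assuming models are drawn uniformly at random and that model names stay server-side until after the vote. The names `Matchup`, `sample_matchup`, and `render_for_voter` are hypothetical illustrations, not the arena's actual code, and the real sampling policy may weight models differently.

```python
import random
from dataclasses import dataclass


@dataclass
class Matchup:
    """One blind comparison; model names are hidden from the voter."""
    question: str
    context: str
    model_a: str
    model_b: str


def sample_matchup(models, question, context, rng=random):
    """Pick two distinct models uniformly at random for the same question and context.

    rng.sample returns the pair in random order, so no model is
    systematically placed in the 'A' slot.
    """
    if len(models) < 2:
        raise ValueError("need at least two models to compare")
    model_a, model_b = rng.sample(models, 2)
    return Matchup(question, context, model_a, model_b)


def render_for_voter(matchup, answer_a, answer_b):
    """Build the voter-facing view: anonymous labels only, no model names."""
    return {
        "question": matchup.question,
        "context": matchup.context,
        "Response A": answer_a,
        "Response B": answer_b,
    }
```

Passing a seeded `random.Random` instance as `rng` makes the pairing reproducible for testing, while the default uses the module-level generator.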

We deliberately selected questions and documents from real-world applications across 10 topics (including technical documentation, policies, legal cases, and manuals). We also intentionally include examples where the context lacks sufficient information or the question is ambiguous or overly broad. This captures the messiness of real-world RAG use cases.

The experiment is specifically designed to evaluate SLMs as summarizers:

Question and context display: Users see a question about document content alongside retrieved text chunks, mimicking what an SLM would receive in a production RAG pipeline.

Highlighted important context: We identify and highlight passages that a high-quality LLM used in generating a reference answer. This makes evaluation more efficient by drawing attention to critical information.

Reference answers available: Below the model responses, we include a reference answer generated by a larger LLM. It is collapsed by default to avoid biasing first impressions, but can be expanded to help with difficult comparisons.

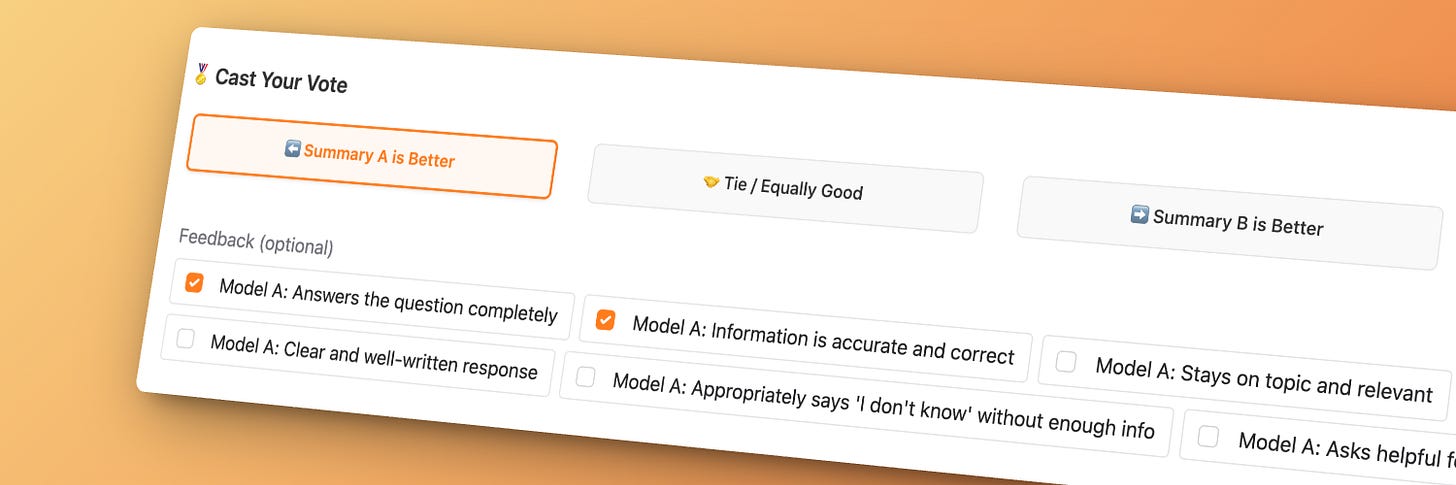

Granular feedback options: Users can indicate not just which response is better, but specifically why: completeness, accuracy, relevance, writing quality, or appropriate refusal.

This controlled environment focuses evaluation on the qualities that matter most for RAG systems while making the human judgment process easier.
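The post does not say how the highlighted passages are identified. As one illustration only, a crude lexical-overlap heuristic could flag context sentences whose words are largely reused in the reference answer. The function `highlight_sentences`, the regex tokenizer, and the 0.6 threshold are all assumptions; a production system might instead ask the LLM to cite the passages it used.

```python
import re


def _tokens(text):
    """Lowercased alphanumeric word tokens; a rough stand-in for real tokenization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def highlight_sentences(chunks, reference_answer, threshold=0.6):
    """Return context sentences whose tokens are mostly covered by the reference answer.

    coverage = |sentence tokens & answer tokens| / |sentence tokens|
    (hypothetical heuristic; the threshold is an illustrative guess)
    """
    answer_tokens = _tokens(reference_answer)
    highlighted = []
    for chunk in chunks:
        # Naive sentence split on terminal punctuation followed by whitespace.
        for sentence in re.split(r"(?<=[.!?])\s+", chunk.strip()):
            toks = _tokens(sentence)
            if toks and len(toks & answer_tokens) / len(toks) >= threshold:
                highlighted.append(sentence)
    return highlighted
```

Working at sentence level rather than chunk level keeps the highlight tight: a long retrieved chunk rarely overlaps heavily with a short answer, but the one sentence the answer draws on usually does.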

Models We Include

We curated a diverse set of open-source small language models, all under 5B parameters, from leading AI labs. The arena includes models like Llama-3.2-1b/3b, Gemma-2/3 models, Qwen2.5 variants, Phi-4-mini, and others representing different architectures and training approaches. We also added our own research model checkpoint (codename "icecream-3b") to benchmark our work directly against established models.

Elo Rating System

Rather than simple win-loss counting, we use an Elo rating system like the one used in chess rankings. This approach offers several advantages:

Models gain more points for beating stronger opponents than weaker ones

Ratings naturally stabilize as models accumulate more comparisons

We can calculate statistical confidence intervals that narrow over time

The system is robust to imbalanced comparison counts between models

All models start at 1500 points and exchange rating points after each comparison. The magnitude of change depends on both the outcome and the current rating difference between the models.
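A minimal sketch of the standard Elo update with a percentile-bootstrap confidence interval. The 1500 starting rating comes from the text above; the K-factor of 32, the tie score of 0.5, the bootstrap settings, and all function names are assumptions for illustration, since the post does not specify the arena's exact parameters or interval method.

```python
import random
from collections import defaultdict

INITIAL_RATING = 1500.0  # starting rating stated in the post
K = 32.0                 # hypothetical K-factor; not given in the post


def expected_score(r_a, r_b):
    """Probability that A beats B under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update(ratings, model_a, model_b, score_a, k=K):
    """Apply one comparison; score_a is 1 (A wins), 0 (B wins), or 0.5 (assumed tie)."""
    e_a = expected_score(ratings[model_a], ratings[model_b])
    delta = k * (score_a - e_a)  # larger when the result is more surprising
    ratings[model_a] += delta
    ratings[model_b] -= delta    # zero-sum: B loses exactly what A gains


def compute_ratings(battles, k=K):
    """battles: list of (model_a, model_b, score_a) tuples in vote order."""
    ratings = defaultdict(lambda: INITIAL_RATING)
    for a, b, s in battles:
        update(ratings, a, b, s, k)
    return dict(ratings)


def bootstrap_ci(battles, n_rounds=200, alpha=0.05, seed=0):
    """Resample votes with replacement, re-rate, and take percentile bounds per model."""
    rng = random.Random(seed)
    samples = defaultdict(list)
    for _ in range(n_rounds):
        resampled = rng.choices(battles, k=len(battles))
        for model, r in compute_ratings(resampled).items():
            samples[model].append(r)
    intervals = {}
    for model, rs in samples.items():
        rs.sort()
        lo = rs[int(alpha / 2 * (len(rs) - 1))]
        hi = rs[int((1 - alpha / 2) * (len(rs) - 1))]
        intervals[model] = (lo, hi)
    return intervals
```

For two models at equal ratings, a single win moves the winner to 1516 and the loser to 1484; beating a higher-rated opponent lowers `expected_score` and so yields a larger `delta`. Because online Elo depends on vote order, resampling also shuffles that order, so the interval reflects order sensitivity as well as sampling noise, and it narrows as more votes accumulate.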

What We Collect

Beyond basic vote counts, we collect:

Structured feedback categories on why users preferred certain responses (completeness, accuracy, relevance, etc.)

Query-context-response triplets with comparative human judgments

Model performance patterns across different question types and domains
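One way to picture a collected comparison is as a record holding the query, the context, both responses, the hidden model identities, and the voter's reasons. The schema below is a hypothetical sketch: field names and the `"A"`/`"B"`/`"tie"` winner encoding are assumptions, while the five reason categories are the ones listed earlier in the post. The helpers show how such records could be aggregated into per-model reason counts and per-topic wins.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Reason(Enum):
    """Feedback categories named in the post."""
    COMPLETENESS = "completeness"
    ACCURACY = "accuracy"
    RELEVANCE = "relevance"
    WRITING_QUALITY = "writing quality"
    APPROPRIATE_REFUSAL = "appropriate refusal"


@dataclass
class VoteRecord:
    """Hypothetical schema for one query-context-response comparison."""
    question: str
    context_chunks: list
    response_a: str
    response_b: str
    model_a: str
    model_b: str
    winner: str  # "A", "B", or "tie" (assumed encoding)
    reasons: list = field(default_factory=list)  # list of Reason
    topic: str = ""


def _winning_model(rec):
    """Return the winning model's name, or None for ties and unknown values."""
    if rec.winner == "A":
        return rec.model_a
    if rec.winner == "B":
        return rec.model_b
    return None


def reasons_by_winning_model(records):
    """Count which feedback reasons were cited when each model won."""
    tally = {}
    for rec in records:
        model = _winning_model(rec)
        if model is not None:
            tally.setdefault(model, Counter()).update(r.value for r in rec.reasons)
    return tally


def wins_by_topic(records):
    """Count wins per (topic, model) pair to surface domain-specific patterns."""
    wins = Counter()
    for rec in records:
        model = _winning_model(rec)
        if model is not None:
            wins[(rec.topic, model)] += 1
    return wins
```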

This data directly feeds into improving our open-source RED-Flow evaluation framework by helping align automated metrics with human preferences, especially for cases where models must recognize that the context is insufficient. We're also researching how presentation style shapes user perception in RAG applications, investigating whether structural formatting, citation patterns, and response organization significantly affect user satisfaction beyond factual accuracy.
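As an illustration of what "aligning automated metrics with human preferences" can be measured against, the sketch below compares an automated judge's A/B verdicts with human votes using raw agreement and Cohen's kappa, broken down by slice (for example, a hypothetical `"insufficient_context"` slice). This is not RED-Flow's API; the function names, slice labels, and choice of kappa are assumptions.

```python
from collections import defaultdict


def agreement_and_kappa(pairs):
    """pairs: list of (human_winner, judge_winner) labels such as "A" or "B".

    Returns (observed agreement, Cohen's kappa). Kappa corrects for the
    agreement expected by chance given each rater's label frequencies.
    """
    n = len(pairs)
    if n == 0:
        raise ValueError("no pairs to compare")
    p_o = sum(h == j for h, j in pairs) / n
    labels = {h for h, _ in pairs} | {j for _, j in pairs}
    p_e = sum(
        (sum(h == label for h, _ in pairs) / n) * (sum(j == label for _, j in pairs) / n)
        for label in labels
    )
    kappa = float("nan") if p_e == 1 else (p_o - p_e) / (1 - p_e)
    return p_o, kappa


def agreement_by_slice(rows):
    """rows: iterable of (slice_name, human_winner, judge_winner)."""
    groups = defaultdict(list)
    for name, human, judge in rows:
        groups[name].append((human, judge))
    return {name: agreement_and_kappa(pairs) for name, pairs in groups.items()}
```

Reporting per-slice numbers matters here because a judge can agree with humans overall while still misjudging the rarer cases where the correct answer is a refusal.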

Next Steps: More Comprehensive Test Sets

We're developing more structured evaluation scenarios inspired by our work on differentiated question types:

Quick Q&A Evaluation: Straightforward fact-based questions where answers are directly present in context

Boolean Questions: Yes/No questions testing binary decision-making based on context

Complex Q&A: Multi-step reasoning, multiple-choice questions, and causal inference from complex contexts. For these more challenging questions, we'll include models with explicit thinking capabilities.

We'll also expand into:

Multimodal content: Evaluating how models process and reason about tables, images, and formulas

Multilingual expansion: Testing performance across multiple languages

Building Better Local RAG Together

The SLM RAG Arena represents the next step in our effort to empower developers to build personalized, private local RAG systems. Rather than just identifying which existing small models perform best, our goal is to understand the direction for improving SLMs so we can collectively build systems that match cloud-based solutions without requiring constant connectivity or massive computing resources.

The arena and leaderboard are available now on Hugging Face 👉 SLM RAG Arena

_____

Blog from Aizip language model team:

Haoguang (Kai) Cai, Oliver Dong